Towards Victory

Dr. Geoffrey Louie's research at Oakland University looks to enhance military human-robot teamwork using immersive gaming technology for real-time simulations

Imagine a battlefield where soldiers seamlessly collaborate with autonomous robots while new cutting-edge technology is tested in real time. This is not a distant future. By blending immersive gaming environments with military-grade simulations, Geoffrey Louie Wing-Yue, Ph.D., distinguished associate professor of electrical and computer engineering, leads a group of OU, U.S. Army and industry researchers working to revolutionize how the Army evaluates the next generation of autonomous systems.

In recent years, the complexity of military engagements has increased with the integration of cutting-edge autonomous technologies, such as ground robots, unmanned aerial vehicles and smart combat vehicles. Because soldiers are required to interact with these systems, it is important to evaluate how effective these technologies are when integrated into human teams in real-world scenarios. However, traditional methods of evaluation are often limited by time, cost, a narrow range of test scenarios and the inability to observe all interactions in real time. Additionally, end users (soldiers) are often introduced to new technologies too late in the development process, which can lead to costly redesigns.

The U.S. Army’s Ground Vehicle Systems Center (GVSC) Immersive Simulation Directorate has long employed virtual experimentation as a core approach for advancing military technologies. By adapting networked gaming systems to the evaluation of future vehicle concepts and technology, the Army can assess how these innovations perform within unit formations (e.g., Platoon, Company) and work together to accomplish mission objectives. This framework served as the foundation for a recent partnership with Oakland University and industry partners.

“In 2022, the U.S. Army partnered with Oakland University and industry in search of more dynamic and scalable solutions that would add capabilities to the GVSC’s virtual experimentation platform. This prompted the development of virtual experimentation systems that leverage multi-user immersive gaming technologies,” explains Dr. Louie, the project's principal investigator.

SECS faculty members Drs. Jia Li, Shadi Alawaneh, Osamah Rawashdeh and Khalid Mirza, along with their graduate students, GVSC and NTT Data, are integral to this effort. Working across several OU laboratories such as the Real Life Intelligent Robotics Laboratory, Augmented Reality Center, GPU Computing Research Laboratory and Embedded Systems Research Laboratory, the team has developed technologies that improve the following platform capabilities: rapid prototyping of multi-user and multi-technology virtual simulations for experimentation; synchronization of company-level and individual/crew-level interactions with novel technologies during virtual experimentation; and development, analysis and evaluation of enabling technologies in virtual experiments.

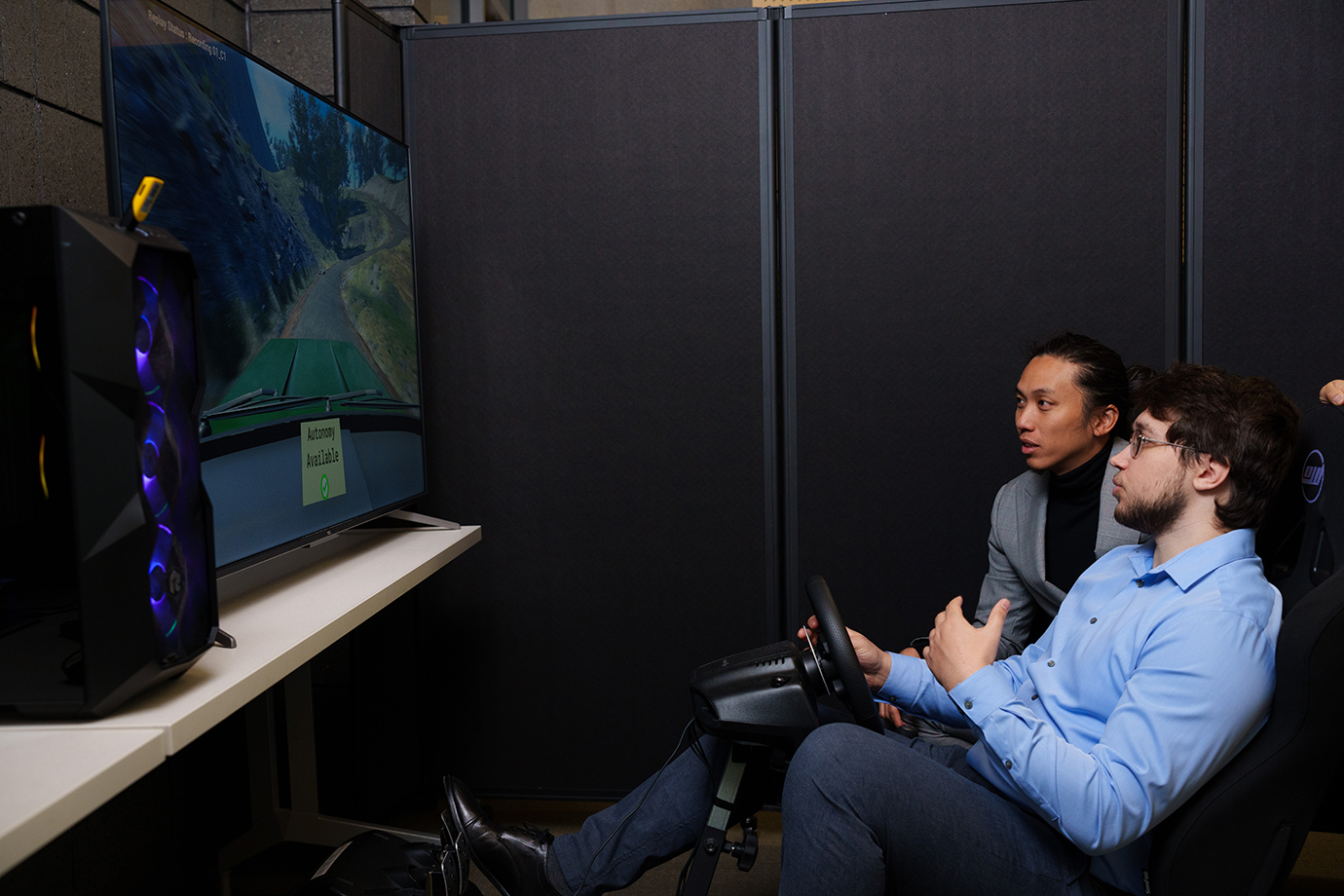

The enhanced platform reduces the time and cost associated with traditional prototype development, and it deepens the insights researchers can draw through the “spectator interface,” the key component of this new virtual experimentation system. The spectator interface enables external observers, such as engineers, researchers and military leaders, to view the interactions between soldiers and autonomous systems in real time, overcoming a major hurdle of traditional military simulations.

“Our spectator system is designed to enhance the observation of virtual human-robot experiments. It tracks events in real time and automatically adjusts the camera to provide clear views of soldier positions and robot behaviors. Additionally, it presents statistics and graphs of collected data, allowing viewers to easily switch between different angles, replay important moments and support their observations with relevant stats. This comprehensive approach enables a deeper assessment of both soldier performance and the effectiveness of autonomous systems,” says Sean Dallas, Ph.D. student in electrical and computer engineering.

To demonstrate the capabilities of this virtual experimentation platform, Dr. Louie and his team conducted a number of studies. One case study, for instance, involved human-robot teaming in a security scenario: a security guard working alongside two mobile robots to protect critical assets from intruders. Participants in this study operated within both virtual and physical environments, allowing researchers to compare the effectiveness of virtual experimentation against real-world conditions.

“The results were rather interesting: while the performance metrics were similar across both environments, there were significant behavioral differences. For example, in the virtual scenario, participants were more likely to stay in one location, as the lack of physical exertion made movement less of a consideration. Virtual participants also experienced lower spatial awareness, due to the limitations of proprioception in virtual environments,” says Dr. Louie.

These findings underscore the unique advantages of virtual experimentation in studying human behavior within complex scenarios, providing valuable insights for enhancing soldier-robot teamwork in real-world situations.

“This project promises significant advancements in the simulation and rapid evaluation of human-robot teaming using AR and VR technology. Beyond enhancing the capacity to evaluate complex systems in a controlled virtual setting, it also builds critical research skills for the university students and faculty involved. This dual focus on technology advancement and assessment, combined with capacity building, is crucial for fostering the next generation of innovators,” says Professor Rawashdeh, chair of the Department of Electrical and Computer Engineering.

The long-term vision for this research is to develop foundational approaches that use immersive gaming technologies to rapidly prototype and evaluate human-AI, human-robot and human-machine interactions. Dr. Louie plans to expand this work by forging collaborations with industry, academic organizations, and the federal and state governments in areas such as healthcare, education, manufacturing, automotive and defense. For more information, please visit Dr. Louie’s website.

Distribution Statement A. Approved for public release; distribution is unlimited. OPSEC9207.

Sean Dallas and Dr. Louie are developing a spectator system to improve the observation of virtual human-robot experiments.

December 11, 2024

By Arina Bokas